Almost every application requires, or at least benefits greatly from, some kind of logging of events and errors. I suspect all of us have written custom code for logging to text files, database tables, the event log and other formats. We all know that we shouldn’t do this, since there are several frameworks out there to help us, such as Microsoft’s own tracing functionality, NLog and log4net. The obstacle to using them is often that it can be difficult to know how to configure them properly, which is why this post describes a set of configuration examples for log4net to make that framework do its magic.

For those of you new to log4net: it’s a really useful package but somewhat difficult to configure. I will not try to write a tutorial, since others have already done so. Instead I will show a set of configuration examples I personally find useful.

To use log4net, you need to add a reference to log4net.dll. Then a new section declaration must be added inside the configSections element of the application’s config file:

<section name="log4net" type="log4net.Config.Log4NetConfigurationSectionHandler, log4net" />
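For orientation, here is a minimal sketch of how the pieces fit together in an app.config or web.config file. The section declaration sits inside `configSections`, which .NET requires to be the first child of `configuration`; the empty `log4net` element is a placeholder for the settings discussed in the rest of this post.

```xml
<?xml version="1.0" encoding="utf-8"?>
<configuration>
  <!-- configSections must be the first child of <configuration> -->
  <configSections>
    <section name="log4net" type="log4net.Config.Log4NetConfigurationSectionHandler, log4net" />
  </configSections>

  <log4net>
    <!-- appenders, the root logger and named loggers go here -->
  </log4net>
</configuration>
```

log4net also has to be told to read this configuration, for example by calling `log4net.Config.XmlConfigurator.Configure()` at startup or by adding the assembly attribute `[assembly: log4net.Config.XmlConfigurator(Watch = true)]`.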

Log4net logging is based on a provider-like design where each type of logging is done in a so-called appender. There are appenders for file system files, databases, etc. and it’s in these appenders that log4net’s power lies.

To configure which appenders to use, it is easiest to use the root configuration element like this:

<log4net>
  ...
  <root>
    <level value="Debug"/>
    <appender-ref ref="ColoredConsoleAppender"/>
    <appender-ref ref="RollingFileAppender"/>
  </root>
</log4net>

We haven’t configured the referenced appenders yet, so that is the next step. The remainder of the post will be about some of the appenders that come with log4net.

APPENDERS

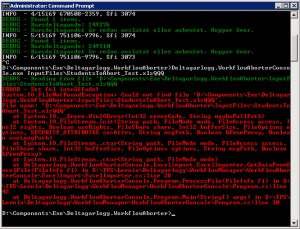

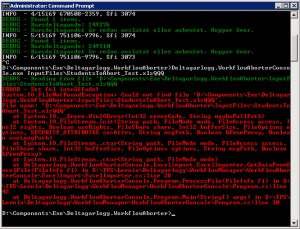

ColoredConsoleAppender

This appender is very useful for console applications and enables different types of events (debug, info, error, etc) to be displayed in different colors.

Here’s how to configure it:

<appender name="ColoredConsoleAppender" type="log4net.Appender.ColoredConsoleAppender">
  <mapping>
    <level value="ERROR"/>
    <foreColor value="Red, HighIntensity"/>
  </mapping>
  <mapping>
    <level value="INFO"/>
    <foreColor value="White"/>
  </mapping>
  <mapping>
    <level value="DEBUG"/>
    <foreColor value="Green"/>
  </mapping>
  <layout type="log4net.Layout.PatternLayout">
    <conversionPattern value="%-5level - %message%newline"/>
  </layout>
</appender>
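The same pattern extends to the remaining levels. As a sketch (the color choices are only a suggestion), mappings like these could be added inside the appender element above to show warnings in yellow and fatal errors as white on red:

```xml
<mapping>
  <level value="WARN"/>
  <foreColor value="Yellow, HighIntensity"/>
</mapping>
<mapping>
  <level value="FATAL"/>
  <foreColor value="White, HighIntensity"/>
  <backColor value="Red"/>
</mapping>
```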

RollingFileAppender

This appender is used to write to a file while limiting the file size. When the maximum file size is reached, a new file is created, which means that no log file will become unmanageably large.

<appender name="RollingFileAppender" type="log4net.Appender.RollingFileAppender">
  <file value="d:\Logs\WorkflowAborter.log"/>
  <rollingStyle value="Size" />
  <appendToFile value="true"/>
  <maximumFileSize value="2000KB"/>
  <maxSizeRollBackups value="5"/>
  <layout type="log4net.Layout.PatternLayout">
    <conversionPattern value="%date [%thread] %-5level %identity %property{log4net:HostName}: %message%newline%exception"/>
  </layout>
</appender>

The maxSizeRollBackups setting controls how many backup files are kept before the oldest one is deleted.

You can control when log4net creates a new file (rolls the file) by setting the rollingStyle property. Its default setting is Composite, which combines date and size limitations, whichever occurs first, to decide when a new file is created. The other possible values are Size and Date, which are self-explanatory, and Once, which creates a new log file every time the application is executed.

Note, however, that maxSizeRollBackups does not limit the number of files when rolling on date boundaries. log4net does not support capping the number of date-based backups, so when using Date (and for the date part of Composite) old files have to be cleaned up by other means.
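For comparison, a date-based variant might look like the sketch below. The appender name, file path and date pattern are placeholders chosen for illustration. The formatted date is appended to the file name of rolled files, and the granularity of the pattern determines how often the file rolls, so `yyyyMMdd` gives one file per day.

```xml
<appender name="DailyRollingFileAppender" type="log4net.Appender.RollingFileAppender">
  <file value="d:\Logs\MyApp.log"/>
  <appendToFile value="true"/>
  <rollingStyle value="Date"/>
  <!-- Roll once per day; the formatted date is appended to the file name -->
  <datePattern value="yyyyMMdd"/>
  <layout type="log4net.Layout.PatternLayout">
    <conversionPattern value="%date [%thread] %-5level %logger - %message%newline"/>
  </layout>
</appender>
```

As with the other appenders, it only takes effect once it is referenced from the root element or from a named logger.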

NetOutputDebugStringAppender

This appender logs events using the OutputDebugString Win32 API call, whose output is shown in the Visual Studio Output window. The appender is also useful in deployed applications since the output is captured by Mark Russinovich’s DebugView tool. (Just remember to enable the “Capture Global Win32” setting in DebugView first, or else nothing will be displayed!)

<appender name="NetOutputDebugStringAppender" type="log4net.Appender.OutputDebugStringAppender" >
  <layout type="log4net.Layout.PatternLayout">
    <param name="ConversionPattern" value="Butler: [%t] %-5p - %m%n" />
    <!--<conversionPattern value="%date [%thread] %-5level %logger [%property{NDC}] - %message%newline" />-->
  </layout>
</appender>

NetTraceAppender

This appender logs to Microsoft’s standard tracing framework in .NET (System.Diagnostics.Trace). In Visual Studio, output is shown in the Output window by default, and for deployed applications it’s possible to configure tracing to store events in different destinations. The appender can be useful if parts of an application use the tracing framework and other parts use log4net, since it makes it possible to channel all events through the same framework (trace), but this is probably not done very often.

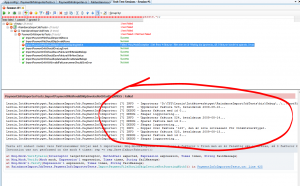

A more useful side effect is that many test runners (e.g. NUnit and ReSharper’s built-in test runner) capture trace calls and store them with the test result, making test failure analysis much easier.

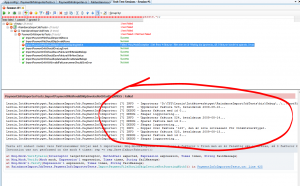

Screen shot of ReSharper with log4net trace output

Configuration:

<!-- Log to .NET trace listeners, e.g. the Output window in Visual Studio... -->
<!-- Ends up in the Output window when run in VS; otherwise it can be viewed in DebugView -->
<appender name="NetTraceAppender" type="log4net.Appender.TraceAppender, log4net">
  <layout type="log4net.Layout.PatternLayout,log4net">
    <param name="ConversionPattern" value="%d [%t] %-5p - %m%n" />
  </layout>
</appender>

SmtpAppender

This appender is often overlooked; at least I have not seen many references to it. It’s very useful for sending mail when an error occurs:

<appender name="SmtpAppender" type="log4net.Appender.SmtpAppender">
  <to value="foo@foobar.se, bar@foobar.se" />
  <from value="helpdesk@foobar.se" />
  <subject value="Error occurred in MyApp" />
  <smtpHost value="smtp.foobar.se" />
  <bufferSize value="512" />
  <lossy value="true" />
  <evaluator type="log4net.Core.LevelEvaluator">
    <threshold value="WARN"/>
  </evaluator>
  <layout type="log4net.Layout.PatternLayout">
    <conversionPattern value="%n%d %-5level [%t] - %m" />
  </layout>
</appender>

Using a configuration similar to the above, a mail such as the following is sent when an event at level WARN or above is logged:

2011-02-04 03:00:06,958 INFO [1] - ###############################################
2011-02-04 03:00:07,328 INFO [1] - Starting...
2011-02-04 03:00:07,362 INFO [1] - ###############################################
2011-02-04 03:00:07,429 INFO [1] - Reading course participants
2011-02-04 03:00:31,265 INFO [1] - 6437 to be processed.
2011-02-04 03:21:17,153 INFO [1] - Hours per week recalculated
2011-02-04 03:21:17,263 INFO [1] - Time spent on hours per week: 00:21:09
2011-02-04 03:21:17,278 INFO [1] - ----------------------------------------------------------------------------
2011-02-04 03:21:17,479 INFO [1] - Reading course participants
2011-02-04 03:22:08,793 INFO [1] - 26532 to be processed.
2011-02-04 04:10:28,050 ERROR [1] - Could not change preliminary end date for hour pool for sfi kd 24085 (Butler kd 83237)

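Note that the events logged before the ERROR event that triggered the mail (10 in this case) are also included, which makes error analysis much easier. The bufferSize setting limits how many of the most recent events are kept for this purpose.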
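The amount of context in each mail is governed by the buffering settings. As a sketch, assuming that roughly ten events of context are enough, the relevant elements of the appender could be tuned like this; the comments describe the intent of each setting:

```xml
<!-- Keep only the most recent events in memory (about ten here) -->
<bufferSize value="10" />
<!-- Lossy: discard older events when the buffer fills instead of mailing them -->
<lossy value="true" />
<!-- Send the buffered events as soon as an event at WARN or above arrives -->
<evaluator type="log4net.Core.LevelEvaluator">
  <threshold value="WARN"/>
</evaluator>
```

With `lossy` set to `false` the appender would instead send a mail every time the buffer fills up, regardless of level, which is rarely what you want for error notifications.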

MemoryAppender

This appender is useful for automated tests.

<appender name="unitTestMemoryAppender" type="log4net.Appender.MemoryAppender">
  <layout type="log4net.Layout.PatternLayout">
    <conversionPattern value="%date [%thread] %-5level %identity %property{log4net:HostName} %logger: %message%newline%exception"/>
  </layout>
</appender>

To create unit tests that check whether logging is performed correctly, code such as this can be used:

using System;
using ActiveSolution.Lernia.Utils.Logging;
using log4net;
using log4net.Appender;
using log4net.Core;
using log4net.Repository.Hierarchy;
using NUnit.Framework;

namespace UtilsTests
{
    [TestFixture]
    public class LoggingTests
    {
        [SetUp]
        public void SetUp()
        {
            var appender = GetAppender();
            if (appender != null) // The appender will be null if nothing has been logged yet.
            {
                appender.Clear();
            }
        }

        [Test]
        public void Logger_ErrorFormat_WithException_ShouldLogMessage()
        {
            // Arrange -----------------------------
            var ex = new ArgumentNullException("foo");

            // Act ---------------------------------
            LogHelper.ErrorFormat(ex, "Unexpected error for id = {0}", 42); // Call custom wrapper function for log4net

            // Assert ------------------------------
            var loggedEvents = GetLoggedEvents();
            Assert.That(loggedEvents.Length, Is.EqualTo(1));
            Assert.That(loggedEvents[0].RenderedMessage, Contains.Substring("Unexpected error for id = 42"));
            Assert.That(loggedEvents[0].RenderedMessage, Contains.Substring("Value cannot be null"));
            Assert.That(loggedEvents[0].RenderedMessage, Contains.Substring("Parameter name: foo"));
        }

        // Returns all events captured by the in-memory appender so far.
        private static LoggingEvent[] GetLoggedEvents()
        {
            MemoryAppender ma = GetAppender();
            return ma.GetEvents();
        }

        // Looks up the MemoryAppender attached to the root logger by its configured name.
        private static MemoryAppender GetAppender()
        {
            var h = LogManager.GetRepository() as Hierarchy;
            return h.Root.GetAppender("unitTestMemoryAppender") as MemoryAppender;
        }
    }
}
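Note that GetAppender above looks the appender up on the root logger, so the test project’s configuration has to attach it there. A minimal sketch of that part of the test configuration, assuming you want every level captured:

```xml
<root>
  <level value="ALL"/>
  <appender-ref ref="unitTestMemoryAppender"/>
</root>
```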

AdoNetAppender

The AdoNetAppender is used to store events in a database. Here’s a configuration example for using a stored procedure to store the events:

<appender name="ErrorHandlerAppender" type="log4net.Appender.AdoNetAppender">
  <filter type="log4net.Filter.LevelRangeFilter">
    <param name="LevelMin" value="WARN" />
  </filter>
  <bufferSize value="1" />
  <connectionType value="System.Data.SqlClient.SqlConnection, System.Data, Version=1.0.3300.0, Culture=neutral, PublicKeyToken=b77a5c561934e089" />
  <connectionString value="Data Source=XXXXXXXXX;Initial Catalog=Errorhand;User ID=XXX;Password=XXX" />
  <commandType value="StoredProcedure" />
  <commandText value="ap_errorhandler_ins" />
  <parameter>
    <parameterName value="@APPLICATIONID" />
    <dbType value="String" />
    <size value="16"/>
    <layout type="log4net.Layout.PatternLayout">
      <conversionPattern value="Butler.EducationSI" />
    </layout>
  </parameter>
  <parameter>
    <parameterName value="@USERID" />
    <dbType value="String" />
    <layout type="log4net.Layout.PatternLayout">
      <conversionPattern value="%identity" />
    </layout>
  </parameter>
  <parameter>
    <parameterName value="@SEVERITY" />
    <dbType value="String" />
    <layout type="log4net.Layout.PatternLayout">
      <conversionPattern value="%level" />
    </layout>
  </parameter>
  <parameter>
    <parameterName value="@DESCRIPTION" />
    <dbType value="String" />
    <size value="4000"/>
    <layout type="log4net.Layout.PatternLayout">
      <conversionPattern value="%message%newline%exception" />
    </layout>
  </parameter>
  <parameter>
    <parameterName value="@LOCAL_ADDR" />
    <dbType value="String" />
    <layout type="log4net.Layout.PatternLayout">
      <conversionPattern value="%property{log4net:HostName}" />
    </layout>
  </parameter>
  <parameter>
    <parameterName value="@REMOTE_ADDR" />
    <dbType value="String" />
    <layout type="log4net.Layout.PatternLayout">
      <conversionPattern value="%property{log4net:HostName}" />
    </layout>
  </parameter>
</appender>

The configuration segment for an AdoNetAppender tends to be rather long, but the example is fairly self-explanatory. For more so-called “conversionPattern fields” to use in parameters, see the PatternLayout class documentation in the log4net SDK reference. These are the same fields used in the other appenders to format the log strings. There’s quite a lot of interesting data that can be retrieved, so make sure to have a look at the list.
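As a sketch of two other commonly useful parameters: the timestamp can be passed as a real DateTime value using RawTimeStampLayout, and the logger name is available through the `%logger` pattern. The parameter names `@LOG_DATE` and `@LOGGER` and the size of 255 are hypothetical; the stored procedure would have to declare matching parameters.

```xml
<parameter>
  <parameterName value="@LOG_DATE" />
  <dbType value="DateTime" />
  <!-- Passes the event timestamp as a DateTime rather than a formatted string -->
  <layout type="log4net.Layout.RawTimeStampLayout" />
</parameter>
<parameter>
  <parameterName value="@LOGGER" />
  <dbType value="String" />
  <size value="255" />
  <layout type="log4net.Layout.PatternLayout">
    <conversionPattern value="%logger" />
  </layout>
</parameter>
```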

FILTERING

Another powerful feature of log4net is the possibility of filtering. For example, if you’re referencing a 3rd party assembly such as NHibernate.dll that logs its events using log4net then those events can be configured separately like this:

<logger name="NHibernate">
  <level value="Error" />
</logger>

This tells log4net that events below ERROR (DEBUG, INFO and WARN) from NHibernate should be ignored. Logger names are hierarchical, so the setting applies to the logger named NHibernate and to every logger whose name starts with “NHibernate.”. Other loggers can be configured in the same way, and loggers that aren’t configured inherit their settings from the root logger we defined at the beginning of this post.
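Named loggers can also have their own appenders. The sketch below, which assumes NHibernate’s `NHibernate.SQL` logger and reuses the RollingFileAppender defined earlier, sends the generated SQL statements to the file only; `additivity="false"` stops those events from also being passed on to the appenders of parent loggers such as the root.

```xml
<logger name="NHibernate.SQL" additivity="false">
  <level value="DEBUG" />
  <appender-ref ref="RollingFileAppender" />
</logger>
```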

TROUBLESHOOTING

Q: What do you do when having trouble with log4net logging?

A: You enable internal logging!

<appSettings>
  <add key="log4net.Internal.Debug" value="true"/>
</appSettings>

Note that these events are written using System.Diagnostics.Trace, so a trace listener may have to be configured as well in order to capture them. For more info about internal logging, see the log4net FAQ.
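A minimal sketch of such a trace configuration, placed in the same config file, writes the internal messages to a text file. The listener name and file path are placeholders, and the process needs write access to the path.

```xml
<system.diagnostics>
  <trace autoflush="true">
    <listeners>
      <add name="log4netInternalDebug"
           type="System.Diagnostics.TextWriterTraceListener"
           initializeData="C:\tmp\log4net-internal.txt" />
    </listeners>
  </trace>
</system.diagnostics>
```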

That’s all for now. I’ll keep updating this post when I find more interesting settings.

/Emil